Land Safely! Daedalean’s Visual Landing System

15th July 2021

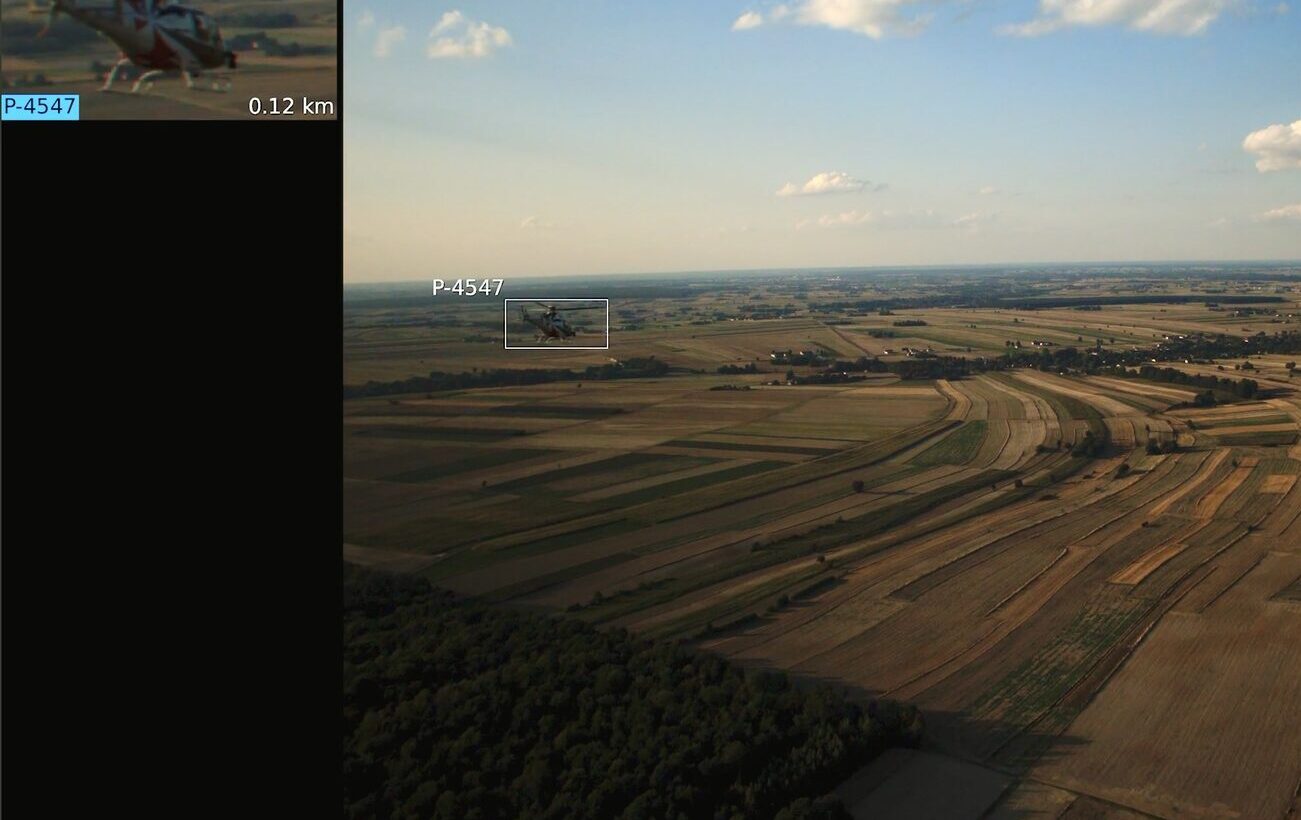

Visual landing is one of the building blocks of future full autonomy, but today the Visual Landing System (VLS) is aimed at serving as pilot assistance on fixed-wing aircraft and rotorcraft. It can find a runway from a long distance, determine the ownship position relative to it, and keep doing so until touch-down. Airspeeds and approach paths are typical of Part 91 operations (General Aviation).

Watch this video from March 2021: it shows eight landings conducted in a single day on a partner’s aircraft in Florida. There, we tested our system’s performance in various conditions. First, in the video, some landings are marked as “trained runway” and others as “untrained runway”. “Trained” means the runway was seen by VLS before this flight, while the neural network was being trained, so the network already knew its parameters. An “untrained” runway had never been seen by the system. VLS’s performance on the untrained runway was impressive: it spotted the runway from a distance of almost 5 km (about 3 NM) and then calculated the correct data until touch-down.

Second, the approach parameters varied across the series of landings: glideslope angles ranged from 3° to steep, and the pilot flew sharp roll manoeuvres (resulting in lateral deviations) in attempts to break the system and make it lose the runway. VLS won.
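To make the approach parameters concrete, here is a minimal sketch of how a glidepath angle and a lateral deviation could be derived from an ownship position expressed relative to the runway. It is an illustration only, not how VLS computes its output: the function name `approach_geometry`, the runway-aligned coordinate frame and the argument names are all assumptions made for this example.

```python
import math


def approach_geometry(along_m, cross_m, height_m):
    """Approach angles from a runway-relative position (hypothetical frame).

    along_m  -- horizontal distance to the touchdown point, measured along
                the extended centreline, in metres (must be positive)
    cross_m  -- lateral offset from the centreline, in metres
                (positive to the right of the centreline)
    height_m -- height above the touchdown point, in metres
    """
    if along_m <= 0:
        raise ValueError("along_m must be positive (aircraft before touchdown point)")

    # Vertical angle between the horizontal and the line to the touchdown point.
    glidepath_deg = math.degrees(math.atan2(height_m, along_m))
    # Horizontal angle between the extended centreline and the aircraft.
    lateral_dev_deg = math.degrees(math.atan2(cross_m, along_m))
    # Straight-line distance to the touchdown point.
    slant_m = math.sqrt(along_m**2 + cross_m**2 + height_m**2)
    return glidepath_deg, lateral_dev_deg, slant_m


if __name__ == "__main__":
    # Aircraft 2 km out, 20 m right of the centreline, 105 m above touchdown.
    glide, lateral, slant = approach_geometry(2000.0, 20.0, 105.0)
    print(f"glidepath {glide:.2f} deg, lateral deviation {lateral:.2f} deg, "
          f"slant range {slant:.0f} m")
```

In this frame, a standard approach keeps the glidepath angle near 3° and the lateral deviation near zero; a steep approach raises the first number, and a sharp roll manoeuvre shows up as a growing second number.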

And finally, the visual conditions: the pilot started in full daylight and kept repeating the landings until sunset. Watch to see how the low sun, the intense sun glare, twilight and the sunset affected the performance.