Explainable AI: Beware What You Wish For

2nd September 2022

Can ‘Explainable AI’ provide a way to apply DO-178C to the safety assurance of neural networks in aviation applications? We think not, but there are a few subtle misconceptions to unpack first.

There is, however, a different approach to ensuring the reliable performance of machine-learned (‘AI’) components in a new generation of flight control instruments: demonstrating Machine Learning Generalization and quantifying so-called “Domain Gaps”.

This 8-minute video gives a synopsis of these concepts for anyone with a basic understanding of high-school-level math.

00:19 How DO-178 works for classical software systems in aviation

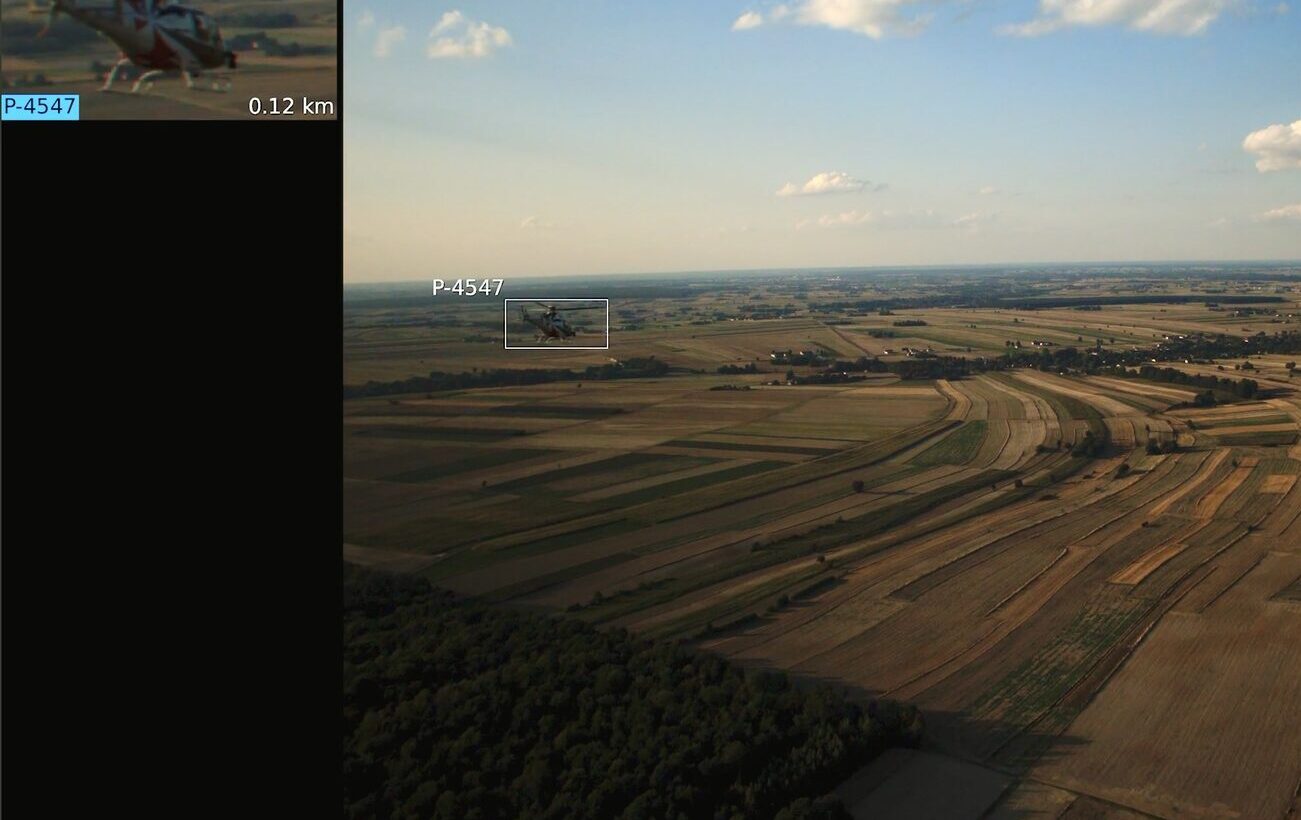

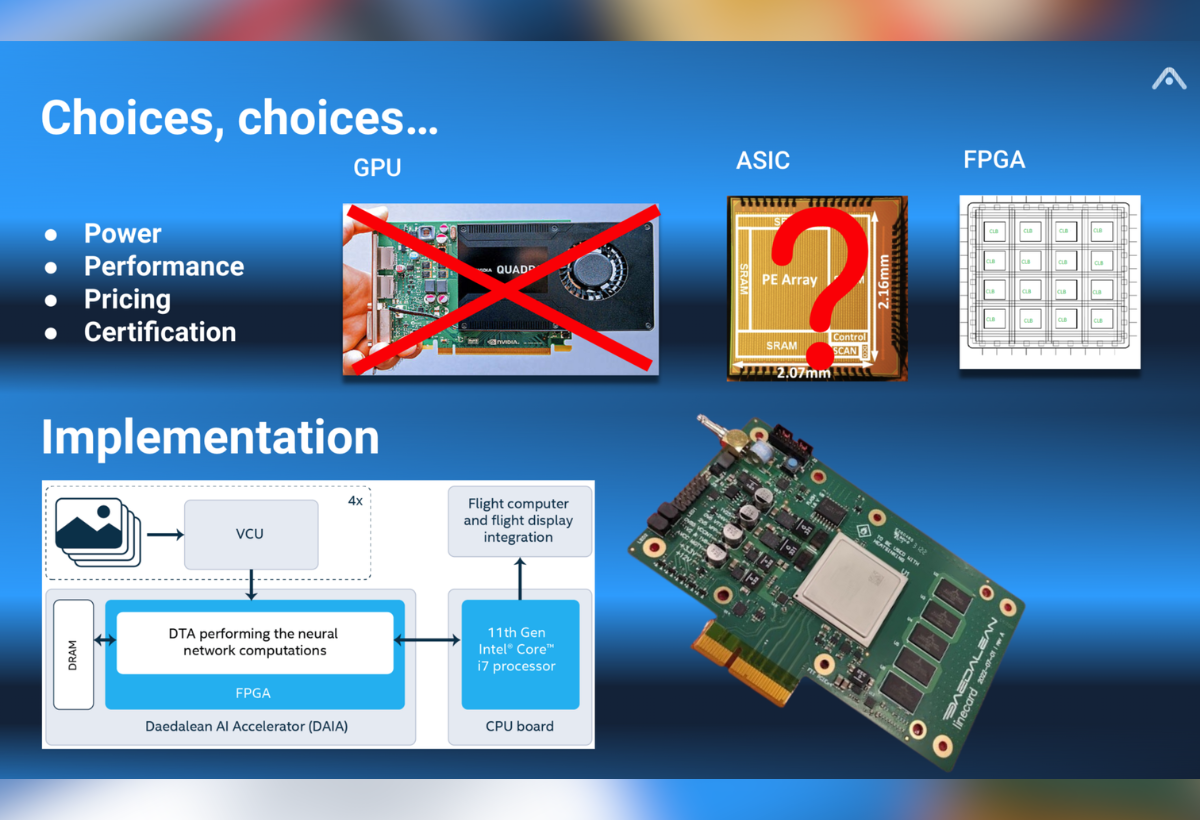

01:00 What is that ‘AI’ you speak of?

03:44 Certainty and uncertainty

04:56 Traceability: Beware of what you wish for

06:16 How to design a system with a component working with uncertainty

06:56 Will it work ‘in vivo’ as well as it worked ‘in vitro’?
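To make the ‘in vivo’ versus ‘in vitro’ question a little more concrete, here is a minimal sketch of one simple way to check for a performance gap between lab data and operational data. It is an illustration under stated assumptions, not the method presented in the video: it assumes a classification component scored with 0-1 loss, labeled samples drawn i.i.d. from each domain, and uses the two-sided Hoeffding bound to decide whether the observed difference in error rates is larger than sampling noise alone could explain. The function names (`error_rate`, `hoeffding_radius`, `domain_gap_report`) are hypothetical.

```python
import math
from typing import Dict, Sequence


def error_rate(predictions: Sequence[int], labels: Sequence[int]) -> float:
    """Fraction of misclassified samples (average 0-1 loss)."""
    if len(predictions) != len(labels) or len(labels) == 0:
        raise ValueError("need equal-length, non-empty sequences")
    wrong = sum(p != y for p, y in zip(predictions, labels))
    return wrong / len(labels)


def hoeffding_radius(n: int, delta: float) -> float:
    """Half-width eps such that |empirical error - true error| <= eps holds
    with probability >= 1 - delta, for n i.i.d. losses in [0, 1]
    (two-sided Hoeffding bound)."""
    return math.sqrt(math.log(2.0 / delta) / (2.0 * n))


def domain_gap_report(lab_pred: Sequence[int], lab_true: Sequence[int],
                      field_pred: Sequence[int], field_true: Sequence[int],
                      delta: float = 0.05) -> Dict[str, float]:
    """Compare error 'in vitro' (lab test set) with error 'in vivo'
    (hypothetical labeled operational data)."""
    e_lab = error_rate(lab_pred, lab_true)
    e_field = error_rate(field_pred, field_true)
    r_lab = hoeffding_radius(len(lab_true), delta)
    r_field = hoeffding_radius(len(field_true), delta)
    gap = e_field - e_lab
    # Each interval holds with prob >= 1 - delta, so both hold together with
    # prob >= 1 - 2*delta (union bound). If the gap exceeds the combined
    # radii, sampling noise alone is an unlikely explanation.
    significant = abs(gap) > r_lab + r_field
    return {"lab_error": e_lab, "field_error": e_field,
            "gap": gap, "significant": significant}


if __name__ == "__main__":
    # Toy data: 2% error in the lab, 10% error in the field, 5000 samples each.
    lab_true = [0] * 5000
    lab_pred = [1] * 100 + [0] * 4900
    field_true = [0] * 5000
    field_pred = [1] * 500 + [0] * 4500
    print(domain_gap_report(lab_pred, lab_true, field_pred, field_true))
```

The Hoeffding bound is distribution-free and therefore conservative: with only 100 samples per domain, the same 2% versus 10% error rates would not be flagged, which illustrates why quantifying a gap credibly requires enough representative operational data.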