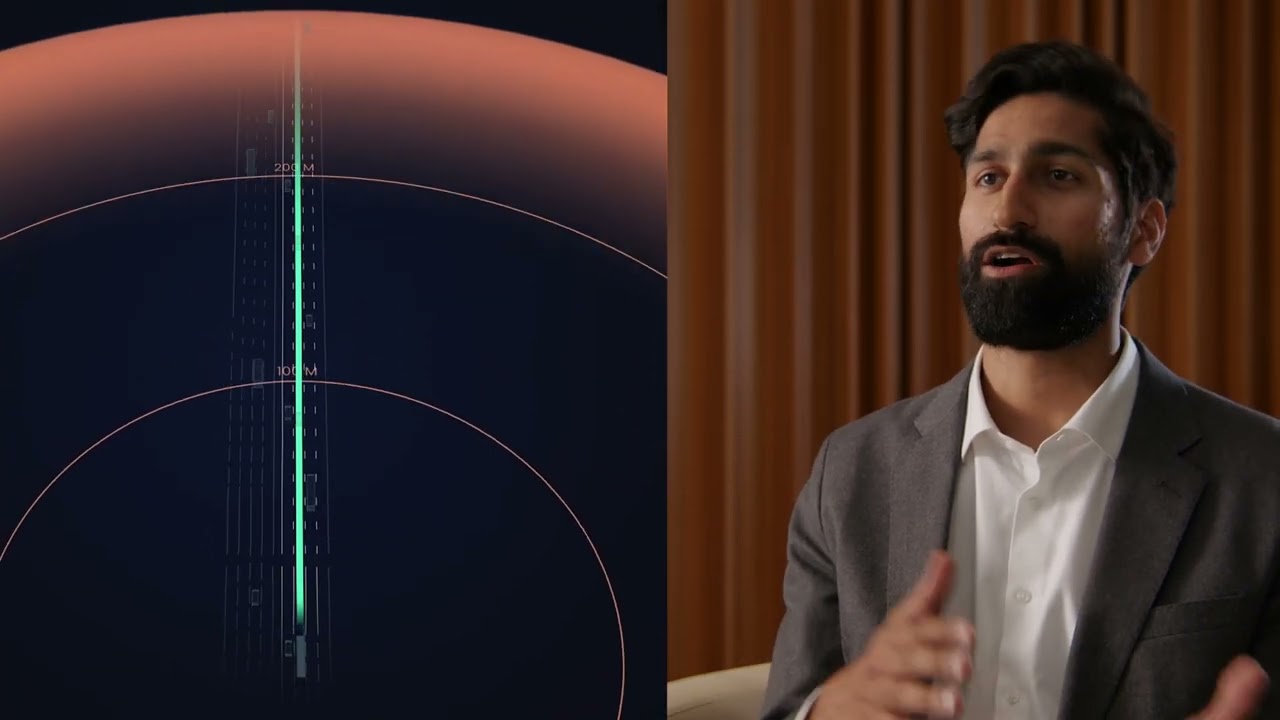

Before making an unprotected turn, the Aurora Driver needs to understand the state of pedestrians, cyclists, changing traffic lights, and other dynamic parts of the environment. This starts with our robust Perception system, which can identify and track objects of all shapes and sizes that move at various speeds.

Here’s an incredibly complex scene from San Francisco. The blue boxes represent vehicles we’re tracking around us, gold boxes are cyclists, red boxes are pedestrians, and the black vehicle in the center is our car. All of this is overlaid onto raw lidar sensor data. In a complex urban scene like this, Aurora needs extreme perception accuracy when predicting the state of the objects around us. That means estimating each object's heading, size and shape, and category, as well as how fast a pedestrian is moving. We need to make these predictions with centimeter-level accuracy, even for objects 200 meters away, and we need to do so in all weather, including snow, storms, sleet, and more.

Following this scene, you can see examples of unprotected turns where the Aurora Driver reasons about multiple elements in real time.

In the second clip, our Driver waits for the pedestrian already in the crosswalk before proceeding into the intersection as the light turns green. It then finds a safe gap while properly considering every aspect of the scene, including the other pedestrians crossing its intended path, oncoming vehicles, a bicyclist, and the traffic light turning yellow.

In the third part of the video, you can see the vehicle making a right turn on red. It correctly advances toward the intersection to mitigate occlusions and yields the right of way to pedestrians and vehicles.

In the final sequence, the car keeps track of oncoming traffic and a pedestrian in the crosswalk as it makes an unprotected left turn.